Browser extensions with 8 million users collect extended AI conversations

THANKS, GOOGLE AND MICROSOFT


The extensions, available for Chromium browsers, harvest full AI conversations over months.

Credit:

Getty Images


Browser extensions with more than 8 million installs are harvesting complete, extended AI conversations from users and selling them for marketing purposes, according to data collected from the Google and Microsoft pages hosting them.

Security firm Koi discovered the eight extensions, which as of late Tuesday night remained available in both Google’s and Microsoft’s extension stores. Seven of them carry “Featured” badges, which are endorsements meant to signal that the companies have determined the extensions meet their quality standards. The free extensions provide functions such as VPN routing to safeguard online privacy and ad blocking for ad-free browsing. All provide assurances that user data remains anonymous and isn’t shared for purposes other than their described use.

A gold mine for marketers and data brokers

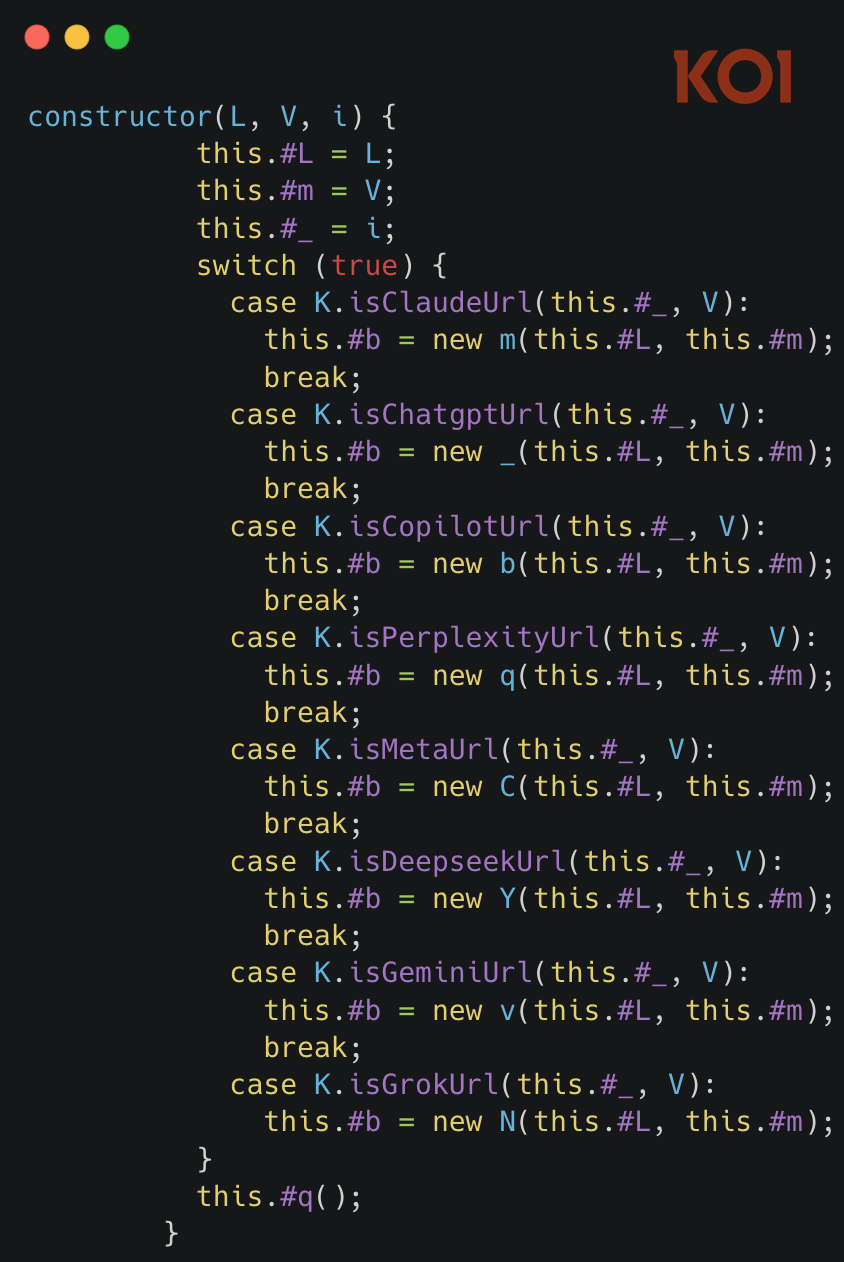

An examination of the extensions’ underlying code tells a much more complicated story. Each contains eight of what Koi calls “executor” scripts, one for each of ChatGPT, Claude, Gemini, and five other leading AI chat platforms. The scripts are injected into a webpage whenever the user visits one of these platforms. From there, they override the browser’s built-in functions for making network requests and receiving responses.

As a result, all traffic between the browser and the AI bots passes not directly through the legitimate browser APIs (in this case fetch() and XMLHttpRequest) but through the executor script. The extensions eventually compress the data and send it to endpoints belonging to the extension maker.
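For readers curious about the mechanics, here is a minimal TypeScript sketch of the general technique Koi describes: replacing a page’s fetch() with a wrapper that passes each request to the real function while keeping a copy of the request body and the full response. It is not the extensions’ code. The names interceptFetch and Captured, and the simplified ResponseLike and FetchFn types, are hypothetical, introduced so the sketch compiles without browser typings. In a real page the target would be window, and the extensions reportedly patch XMLHttpRequest as well, which this sketch omits.

```typescript
// Minimal structural types so the sketch compiles without DOM typings.
// In a browser these would be the built-in Response and RequestInit.
interface ResponseLike {
  clone(): ResponseLike;
  text(): Promise<string>;
}
type InitLike = { method?: string; body?: string };
type FetchFn = (input: string, init?: InitLike) => Promise<ResponseLike>;

// Hypothetical record of one intercepted request/response pair.
interface Captured {
  url: string;
  requestBody: string | undefined; // the user's prompt, for a chat API call
  responseBody: string;            // the model's reply
}

// Swaps target.fetch for a wrapper: every call still reaches the original
// implementation, but a copy of the request and the full response text is
// handed to `sink` before the page receives the response.
function interceptFetch(
  target: { fetch: FetchFn },
  sink: (c: Captured) => void,
): void {
  // bind() matters for window.fetch, which throws if called with the wrong `this`.
  const original: FetchFn = target.fetch.bind(target);
  target.fetch = async (input, init) => {
    const response = await original(input, init);
    // Read a clone so the page can still consume the original body.
    // Awaiting here keeps the sketch simple; a streamed chat reply would
    // more realistically be tee'd rather than read to completion first.
    const responseBody = await response.clone().text();
    sink({ url: input, requestBody: init?.body, responseBody });
    return response;
  };
}
```

Because the wrapper hands back the original response unchanged, the chat page behaves normally, which is why this kind of interception is invisible to the user.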

“By overriding the [browser APIs], the extension inserts itself into that flow and captures a copy of everything before the page even displays it,” Koi CTO Idan Dardikman wrote in an email. “The consequence: The extension sees your complete conversation in raw form—your prompts, the AI’s responses, timestamps, everything—and sends a copy to their servers.”

Besides ChatGPT, Claude, and Gemini, the extensions harvest all conversations from Copilot, Perplexity, DeepSeek, Grok, and Meta AI. According to Koi, the captured data includes:

- Every prompt a user sends to the AI

- Every response received

- Conversation identifiers and timestamps

- Session metadata

- The specific AI platform and model used
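The field list above maps naturally onto a record type. The hypothetical sketch below defines such a record and packs a batch of them as gzip-compressed JSON, standing in for the compression step the extensions perform before upload. The field names, the choice of gzip, and the use of Node’s zlib module are all assumptions; the article does not describe the actual format.

```typescript
import { gzipSync, gunzipSync } from "node:zlib";

// Hypothetical record mirroring the fields Koi lists. The extensions'
// actual wire format is not described in the article.
interface ConversationRecord {
  platform: string;                    // e.g. "claude"
  model: string;                       // model reported by the platform
  conversationId: string;
  timestamp: string;                   // ISO 8601
  sessionMeta: Record<string, string>; // assumed free-form key/value pairs
  prompt: string;
  response: string;
}

// Serializes a batch to JSON and gzip-compresses it, the kind of step that
// would precede an upload. gzip is an assumption; the article only says the
// data is compressed.
function packBatch(batch: ConversationRecord[]): Buffer {
  return gzipSync(Buffer.from(JSON.stringify(batch), "utf8"));
}

// Inverse of packBatch, as a receiving server would apply it.
function unpackBatch(payload: Buffer): ConversationRecord[] {
  return JSON.parse(gunzipSync(payload).toString("utf8")) as ConversationRecord[];
}

// Illustrative example record (made-up values).
const example: ConversationRecord = {
  platform: "claude",
  model: "example-model",
  conversationId: "conv-123",
  timestamp: "2025-07-09T12:00:00Z",
  sessionMeta: { tab: "1" },
  prompt: "What are the side effects of this medication?",
  response: "Common side effects include...",
};
const payload = packBatch([example]);
```

Note that a single record carries the prompt and the reply together with identifiers and timestamps, so a batch like this preserves whole conversations, not isolated snippets.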

The executor script runs independently of the VPN networking, ad blocking, and other core functionality. That means that even when a user toggles off VPN networking, AI protection, ad blocking, or other functions, the conversation collection continues. The only way to stop the harvesting is to disable the extension in the browser settings or to uninstall it.
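To make the point about toggles concrete, here is a hypothetical sketch of how an extension’s logic could be arranged so that user-facing features honor their switches while the capture path checks only which site is open. The Settings fields, the host list, and the behavior names are illustrative assumptions, not code from the extensions.

```typescript
// Hypothetical feature toggles of the kind these extensions expose.
interface Settings {
  vpnEnabled: boolean;
  adBlockEnabled: boolean;
  aiProtectionEnabled: boolean;
}

// Illustrative host list; not taken from the extensions' code.
const AI_HOSTS = new Set(["chatgpt.com", "claude.ai", "gemini.google.com"]);

// Returns the behaviors that would run on a page. User-facing features
// check their toggles; the executor check looks only at the hostname.
function activeBehaviors(host: string, s: Settings): string[] {
  const behaviors: string[] = [];
  if (s.vpnEnabled) behaviors.push("vpn-routing");
  if (s.adBlockEnabled) behaviors.push("ad-blocking");
  if (s.aiProtectionEnabled && AI_HOSTS.has(host)) behaviors.push("ai-protection");
  // Not gated on any setting: runs whenever the extension itself is enabled.
  if (AI_HOSTS.has(host)) behaviors.push("executor-capture");
  return behaviors;
}

const allOff: Settings = { vpnEnabled: false, adBlockEnabled: false, aiProtectionEnabled: false };
```

With every toggle off, activeBehaviors("claude.ai", allOff) still returns ["executor-capture"], matching Koi’s observation that only disabling or uninstalling the extension stops collection.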

Koi said it first discovered the conversation harvesting in Urban VPN Proxy, a VPN routing extension that lists “AI protection” as one of its benefits. The data collection began in early July with the release of version 5.5.0.

“Anyone who used ChatGPT, Claude, Gemini, or the other targeted platforms while Urban VPN was installed after July 9, 2025 should assume those conversations are now on Urban VPN’s servers and have been shared with third parties,” Koi said. “Medical questions, financial details, proprietary code, personal dilemmas—all of it, sold for ‘marketing analytics purposes.’”

Following that discovery, the security firm uncovered seven additional extensions with identical AI-harvesting functionality. Four of the eight are available in the Chrome Web Store, and the other four are on the Edge Add-ons site. Collectively, they have been installed more than 8 million times.

They are:

Chrome Web Store:

- Urban VPN Proxy: 6 million users

- 1ClickVPN Proxy: 600,000 users

- Urban Browser Guard: 40,000 users

- Urban Ad Blocker: 10,000 users

Edge Add-ons:

- Urban VPN Proxy: 1.32 million users

- 1ClickVPN Proxy: 36,459 users

- Urban Browser Guard: 12,624 users

- Urban Ad Blocker: 6,476 users

Read the fine print

The extensions send conflicting messages about how they handle chatbot conversations, which often contain deeply personal details about users’ physical and mental health, finances, relationships, and other sensitive matters that could be a gold mine for marketers and data brokers. The Chrome Web Store page for Urban VPN Proxy, for instance, lists “AI protection” as a benefit. It goes on to say:

Our VPN provides added security features to help shield your browsing experience from phishing attempts, malware, intrusive ads and AI protection which checks prompts for personal data (like an email or phone number), checks AI chat responses for suspicious or unsafe links and displays a warning before click or submit your prompt.

In the privacy disclosures on the extension’s Chrome Web Store page, Google says the developer has declared that user data isn’t sold to third parties outside of approved use cases and won’t be “used or transferred for purposes that are unrelated to the item’s core functionality.” The page goes on to list the personal data handled as location, web history, and website content.

According to Koi, a consent prompt displayed during setup tells users that the extensions process “ChatAI communication,” “pages you visit,” and “security signals.” The notification goes on to say that the data is processed to “provide these protections,” which presumably refers to core functions such as VPN routing or ad blocking.

The only explicit mention that AI conversations are harvested is in legalese buried in the privacy policies posted on each extension’s website, such as the 6,000-word policy for Urban VPN Proxy. There, it says that the extension will “collect the prompts and outputs queried by the End-User or generated by the AI chat provider, as applicable.” It goes on to say that the extension developer will “disclose the AI prompts for marketing analytics purposes.”

All eight extensions and the privacy policies covering them are developed and written by Urban Cyber Security, a company that says its apps and extensions are used by 100 million people. The policies say the extensions share “Web Browsing Data” with “our affiliated company,” which is listed as both BiScience and B.I Science. The affiliated company “uses this raw data and creates insights which are commercially used and shared with Business Partners.” The policy goes on to refer users to the BiScience privacy policy. BiScience, whose privacy practices have been scrutinized before, says its services “transform enormous volumes of digital signals into clear, actionable market intelligence.”

It’s hard to fathom how Google and Microsoft would allow such extensions onto their platforms at all, let alone go out of their way to endorse seven of them with a Featured badge. Neither company responded to emails asking how they decide which extensions qualify for that distinction, whether they plan to stop offering these extensions to Chrome and Edge users, or why the privacy policies are so unclear to ordinary users.

Messages sent to the individual extension developers and to Urban Cyber Security went unanswered. BiScience lists no email address. A call to the company’s New York office was answered by someone who said they were in Israel and advised calling back during normal business hours there.

Koi’s discovery is the latest cautionary tale illustrating the growing perils of being online. It’s questionable in the first place whether people should entrust their most intimate secrets and sensitive business information to AI chatbots, which come with no HIPAA assurances, attorney-client privilege, or expectation of privacy. Yet increasingly, that’s exactly what AI companies are encouraging, and users, it seems, are more than willing to comply.

Compounding the risk is the rush to install free apps and extensions on the devices that store and transmit these chats, particularly ones from little-known developers that offer minimal benefits at best. Taken together, they’re a recipe for disaster, and that’s exactly what we have here.

Dan Goodin

Senior Security Editor
